I joined Gem four months ago as the Senior Director of Recruiting Operations and, indeed, as its first Recruiting Ops hire. I came from HubSpot, where I’d built the Recruiting Ops team from scratch, and I was excited to grow an Ops team again with the lessons I’d learned the first time in hand. A lot falls under the purview of Talent Operations: process improvements, data de-siloing, programs, analytics and strategy, training and change management. But one of my first projects at Gem has been building out a v.1 of our recruiting capacity model, and I’d like to walk through how we’re doing it here for anyone who’s taking on an Ops role, whether formally or informally. It’s a project worth prioritizing, because the more accurate your capacity model, the more predictability you have around whether you can hit the hiring goals the business is asking of you. And that affects everything.

The ideal time for a recruiting organization to start thinking about a capacity model is as soon as possible. I say this because it’s nearly impossible to build a team without this data, or at least without a best guess at this data, which is where most capacity models begin out of necessity. I’ve seen teams try to fly the recruiting airplane without one, but what they end up spending on resources isn’t ultimately aligned with ideal output. Every time the business asks the recruiting team to move faster, the answer is: Well, recruiting needs more heads. But the reality is that the market is finite, and “more heads” isn’t always the most effective or economical response. Maybe it’s automation. Maybe it’s more thoughtful training or enablement. Maybe it’s investing in talent brand. So often there are other levers to pull than additional recruiting headcount. But without the data, it’s tough to know if you currently have the resources to meet the demand, let alone to strategize how to fill the gaps.

Gathering historical, contextual, and anecdotal data for a directionally-correct recruiting capacity model

Gem had a kind of rudimentary capacity model before I arrived, which is what forward-thinking teams try to put in place early on, even when they don’t have an Ops function to dedicate time to it. It was like: Hey, I feel like these people can make this number of hires. Often this is the gut-feel data Talent Ops folks inherit when they come into an organization. It’s anecdotal, based on how recruiters have seen folks perform at their organization or at previous organizations. So on my end, step one was to aggregate some data to get us to a more directionally-correct place.

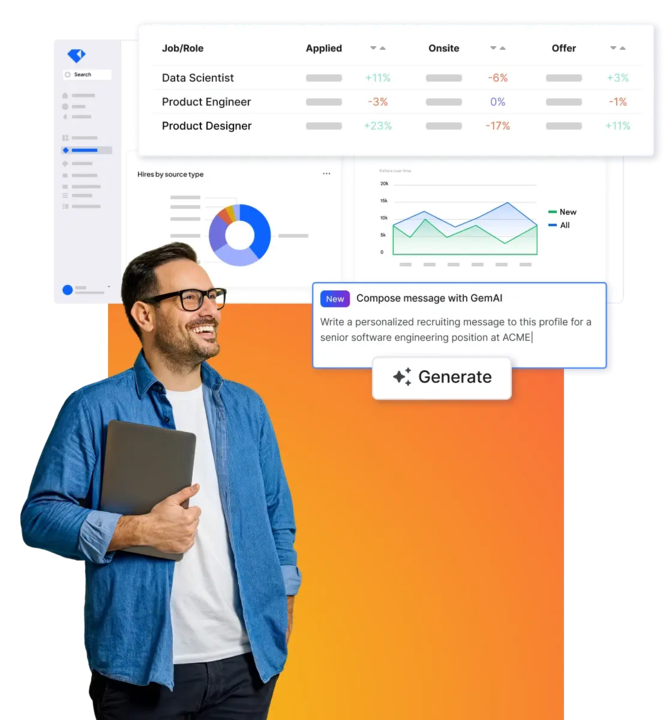

Spoiler alert for those of you creating a recruiting capacity model for the first time: the data will likely be fragmented, and the initial process will likely be very manual. (I’m currently tracking it in spreadsheets.) It’s not news that there are a lot of workarounds recruiters have to do just to get their jobs done. Data points exist across your tech stack. For us, they’re in our ATS and in Gem, and having Gem in place meant that most of the core data I needed was already exposed and easy to access. There’s no easy jump from having nothing to having a fully built-out data model. The point is to start somewhere.

There are a few places I get that data early on. The first is a combination of historical data I’ve pulled alongside conversations with recruiting managers for context—initially at Gem, we looked at hires made by each recruiter and broke it down by department. I bring them the data and say, Here are the actual numbers; is this still a reasonable output?

That word “still” is important because you don’t want to normalize the extraordinary. Here’s an example of what I mean by that: The first two recruiters in an organization are going to have extraordinary output. They’re hiring everyone between the two of them; there are things they can do more nimbly because they’re a small team; the two of them are working incredibly hard and are overextended in those early stages of the business. But as the team grows, there’s a kind of law of diminishing returns. That kind of output isn’t sustainable unless you plan on burning your recruiters out. As you grow, your rules of engagement get more accurate, and recruiters spend more time on long-term candidate nurture. All of that means the ten new recruiters you hire simply won’t have the output of the first two. So I always optimize for context. Don’t assume that if a recruiter made x number of hires last quarter or last year, they can do exactly that again. They and their managers know the context of that activity. So have the conversations and get the qualitative data alongside the quantitative data you bring them.

The second place I go for data is a combination of standard market benchmarks, anecdotal numbers from my peers, and previous experience in my own recruiting career. (Again, it’s not the most accurate data, but it’s directionally correct, and that’s the point of a v.1 capacity model—to turn you in the right direction. Recruiting capacity models necessarily get better with more inputs, over time.) What have I goaled in previous organizations? I’ll gut-check those numbers with my peers—How many engineers is your team responsible for hiring?—as a way of making sure I’m in the ballpark.

So for the moment, that’s my watermark—the line I draw in the sand—arrived at from a combination of historical data, qualitative context, and anecdotal evidence. In time, we’ll have wholly empirical data that will provide the framework for a data-driven capacity model by resource. That’s what we’re striving for—not only for ourselves, but for our customers—directionally-accurate capacity models across industry verticals and talent profiles. Imagine having the data to benchmark your capacity models by industry, by company size, and so on. That’s where Gem is ultimately headed.

Determining capacity model inputs, and building in assumptions and buffers

The questions I asked for initial inputs into our v.1 were: How many high-level resources do we have? That included recruiters and sourcers. What was their historical output, and were those exceptional results or results we thought they could replicate? We also built in some assumptions to make the model a bit more conservative. We built in a PTO assumption: no one will be working at 100% every day (Gem has unlimited vacation days and we want our employees to enjoy them), so we included a 10% buffer such that every resource is effectively expected to be operating at 90%. We also built in buffers around the number of resources we have, or expect to have. We account for ramp time (3 months per resource). We account for leadership (we want our leaders to lead). For example, in the past, Nat and Swish (Nathalie Grandy and Justin Swisher, Technical Recruiting Manager and Head of Business Recruiting) were in the model and responsible for hiring. We took them out this year as an additional buffer. That left us with a number we felt pretty confident our recruiters could hit.
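For anyone who wants to see the arithmetic behind those buffers, here is a minimal Python sketch. It is not Gem's actual model (that lives in spreadsheets). The 10% PTO buffer, the 3-month ramp, and the exclusion of leaders come from the description above; the `Resource` class, the function names, the per-recruiter monthly output, and the assumption that a ramping resource contributes zero hires until ramp ends are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass

PTO_BUFFER = 0.10   # from the post: plan every resource at 90%
RAMP_MONTHS = 3     # from the post: three months of ramp per resource


@dataclass
class Resource:
    name: str                  # hypothetical label
    monthly_hires: float       # assumed baseline, agreed with the manager
    months_at_company: int     # tenure at the start of the quarter
    is_leader: bool = False    # leaders are left out as an extra buffer


def productive_months(resource: Resource, quarter_months: int = 3) -> int:
    """Months in the quarter that fall after the ramp period.

    Assumption: a ramping resource contributes no hires until ramp ends.
    """
    remaining_ramp = max(0, RAMP_MONTHS - resource.months_at_company)
    return max(0, quarter_months - remaining_ramp)


def quarterly_capacity(team: list[Resource], quarter_months: int = 3) -> float:
    """Expected hires for the quarter after the PTO, ramp, and leader buffers."""
    total = 0.0
    for resource in team:
        if resource.is_leader:
            continue  # leaders lead; they carry no hiring target
        months = productive_months(resource, quarter_months)
        total += resource.monthly_hires * months * (1 - PTO_BUFFER)
    return total


# Made-up team for illustration only.
team = [
    Resource("tenured recruiter", monthly_hires=2.0, months_at_company=12),
    Resource("new recruiter", monthly_hires=2.0, months_at_company=1),
    Resource("recruiting manager", monthly_hires=3.0, months_at_company=24, is_leader=True),
]
capacity = quarterly_capacity(team)
print(f"Planned hires this quarter: {capacity:.1f}")
```

Treating ramp as all-or-nothing keeps the sketch conservative. A partial-productivity ramp would be a reasonable refinement once there is data to support it.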

If we’d had a longer runway for the Q2 model, we could’ve been more granular than we were upfront. We’ll get more in the weeds—and the model will get more nuanced—over time. In v.2 I’ll be including seniority: What’s the title of the recruiting resource we’re considering? I’ll be separating out by resource to tell the story about what a recruiter can do versus what a sourcer can influence. I’ll be thinking about the tenure of the recruiter: If someone has been at Gem for a year versus three years, how does that change their potential impact? I’ll be thinking about source of hire, because a passively-sourced candidate will take more effort than a referral or an inbound applicant will. I’ll be asking whether a role is technical or non-technical, regardless of where in the business it sits. What’s the historical offer-accept rate for the role? Is the role evergreen or niche? Where is it located? (Hiring in Europe during the summer can be as difficult as hiring in the U.S. during December.) Is hiring frontloaded at the beginning of the year? How will seasonality impact capacity?

Normalizing what’s predictable to diagnose and course-correct when output drops

Hiring is multidimensional, and there are so many factors that can impact output. The point is not to have a model that’s so predictive that we have to-the-hire accuracy. The point, ultimately, is to normalize what’s predictable. Let’s normalize output by recruiting resource—whether they’re a sourcer or a recruiter—and by level. If you can normalize those things, everything else you do as a TA leader or a Recruiting Ops professional is around optimizing output. For example, we might normalize that an L2 recruiter can deliver five SDRs a month. That’s a line we might draw after seeing its predictability over a period of three quarters. What happens, then, in Q4, when we see a significant drop in hiring output? Because we’ve normalized a baseline, we can ask why. Maybe offer-accept rates look typical. Maybe our passthrough rates are as healthy as ever. So what gives? Maybe we see that response and engagement rates have dropped. Maybe we realize it’s a seasonal issue—in our Q4 we have nearly four holiday weeks. Q4 is naturally a down quarter. And that's the reason output dipped. Now we have data for an even more accurate model the next time around.
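That diagnostic step can be sketched in a few lines of Python: compare the current quarter's metrics to the normalized baseline and surface only the ones that moved meaningfully. Everything here is illustrative. The metric names, the rates, and the 15% tolerance are assumptions; only the "five SDRs a month" baseline echoes the example above.

```python
def flag_deviations(
    baseline: dict[str, float],
    current: dict[str, float],
    tolerance: float = 0.15,  # assumed threshold; tune it to your own data
) -> dict[str, float]:
    """Return metrics whose relative change from baseline exceeds tolerance."""
    flagged = {}
    for metric, expected in baseline.items():
        if metric not in current or expected == 0:
            continue  # nothing to compare against
        change = (current[metric] - expected) / expected
        if abs(change) > tolerance:
            flagged[metric] = change
    return flagged


# Hypothetical numbers: a normalized baseline versus a soft Q4.
baseline = {
    "hires_per_month": 5.0,
    "offer_accept_rate": 0.80,
    "passthrough_rate": 0.40,
    "response_rate": 0.30,
}
q4 = {
    "hires_per_month": 3.5,
    "offer_accept_rate": 0.79,
    "passthrough_rate": 0.41,
    "response_rate": 0.20,
}

for metric, change in flag_deviations(baseline, q4).items():
    print(f"{metric}: {change:+.0%} vs. baseline")
```

In this made-up case, offer-accept and passthrough rates stay within tolerance, so attention goes to the response rate, which is the kind of narrowing-down the baseline makes possible.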

In other words, the goal of a recruiting capacity model is, of course, predictability. But it also creates an environment in which TA can more easily diagnose the source of problems. Say we open an office in New York. We apply our capacity model there, but we see that recruiters aren’t seeing the same output for New York roles as they are for Bay Area roles. We dig in and see that we have a lower offer-accept rate in New York, and we realize it’s because the brand isn’t there. That level of insight and optimization is the holy grail of a recruiting capacity model. Where has your output strayed from what you’ve normalized? And how do you course-correct from there?

My advice is to normalize the data on each individual resource. You can generally get a pretty good sense of output by resource over a period of a year—even better over two years, because now you have historical data on seasonality. What’s more, as the team grows, your evidence won’t just be based on a single resource. You’ll have more professionals in the role and you can say with a higher level of confidence, Yes, this is the average.
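One way to put a number on that growing confidence is to average output per role and level and track how many observations sit behind each average. The sketch below is a hypothetical illustration, not a description of Gem's tooling: the record layout, the `baseline_by_group` name, and the quarterly figures are invented, and the standard error is just one simple way to express how much to trust an average.

```python
from collections import defaultdict
from math import sqrt
from statistics import mean, stdev


def baseline_by_group(records):
    """Summarize quarterly hires per (role, level).

    records: iterable of (role, level, hires_in_quarter) tuples.
    Returns {(role, level): (average, standard_error_or_None, observations)}.
    """
    grouped = defaultdict(list)
    for role, level, hires in records:
        grouped[(role, level)].append(hires)

    summary = {}
    for key, values in grouped.items():
        # A standard error needs at least two observations.
        stderr = stdev(values) / sqrt(len(values)) if len(values) > 1 else None
        summary[key] = (mean(values), stderr, len(values))
    return summary


# Invented data: three recruiter-quarters and a single sourcer-quarter.
records = [
    ("recruiter", "L2", 14),
    ("recruiter", "L2", 16),
    ("recruiter", "L2", 15),
    ("sourcer", "L1", 6),
]
summary = baseline_by_group(records)
for (role, level), (avg, stderr, n) in summary.items():
    spread = "n/a" if stderr is None else f"{stderr:.2f}"
    print(f"{level} {role}: avg {avg} hires/quarter, std. error {spread}, n={n}")
```

A group with a single observation gets no standard error at all, which is a useful reminder that one recruiter's output is an anecdote rather than an average.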

Even as the model gets more accurate—and more complex—with more inputs, in future quarters I’ll be reporting out on the accuracy of previous models. In Q3 I’ll be asking of my Q2 model: How accurate was this? Was I 60% accurate? Was I 90% accurate? Where did we fall short in terms of planning? The answers to those questions will inform the next iteration of the model. Over time we’ll have a standard annual capacity plan—a yearly predictive model—that we’ll return to and tweak and get more granular with on a quarterly basis, as we add or lose resources, as the business changes, and so on.
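"Accuracy" can be defined in more than one way. A minimal sketch, assuming it means one minus the relative gap between planned and actual hires (an assumption, as are the function name and the sample numbers):

```python
def plan_accuracy(planned: float, actual: float) -> float:
    """Accuracy of a capacity plan as 1 - |actual - planned| / planned.

    Assumed definition: over- and under-delivery are penalized equally,
    and the score is floored at 0 for misses larger than the plan itself.
    """
    if planned <= 0:
        raise ValueError("planned hires must be positive")
    return max(0.0, 1 - abs(actual - planned) / planned)


# Hypothetical quarter: the model planned 20 hires and the team made 18.
score = plan_accuracy(planned=20, actual=18)
print(f"Model accuracy: {score:.0%}")
```

Running the same calculation per department or per role shows where planning fell short, not just by how much overall.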

Recruiting capacity models should be looked at quarterly and in perpetuity. Businesses morph, brands change, and both internal and external circumstances will impact your company and therefore your recruiting team’s output. Keep returning to your model, and keep normalizing. I know we plan to do the same at Gem, and I look forward to updating you on the journey.
